Applications

Each increase in computing power enables deeper and more detailed insights into the climate system. With the greater computing power, main memory and storage capacity now available, users of the DKRZ system can run simulations with global climate and Earth system models at a higher resolution than before. At grid resolutions of 2.5 km or finer, models can represent important small-scale climate processes explicitly from physical principles, whereas the much coarser global models used so far have to parameterize them. One example is the explicit representation of clouds and precipitation that the new models now allow (see Figure 1). Small-scale interactions between the atmosphere, the ocean and the other components of the Earth system can also be taken into account.

Figure 1: Visualizations of clouds on a February day in simulations at a resolution of about 80 km, which is common for CMIP6 simulations (here: MPI-ESM HR, left), and at the 2.5 km resolution enabled by ESiWACE (here: ICON R2B10, right). While the CMIP6 model captures large-scale cloud formation over the Caribbean, the high-resolution ICON simulation also represents the fine details of cloud structures and thus the behavior of different cloud types. Because the atmospheric circulation is represented in much greater detail, considerably better climate projections are expected once sufficiently long periods can be simulated. The weather situations in the two simulations differ because the models were started from different initial conditions. (Figure: Florian Ziemen, DKRZ)

Among many other new applications with different models and resolutions, it is now feasible to use the high-resolution models described above to project possible climate changes over the course of this century and to investigate them under different scenarios. Until now, simulations with such high-resolution climate models were only possible for very short periods of a few months. Longer simulation periods require significantly more computing time and storage capacity, which DKRZ can now provide with Levante.

Technical Specifications of Levante

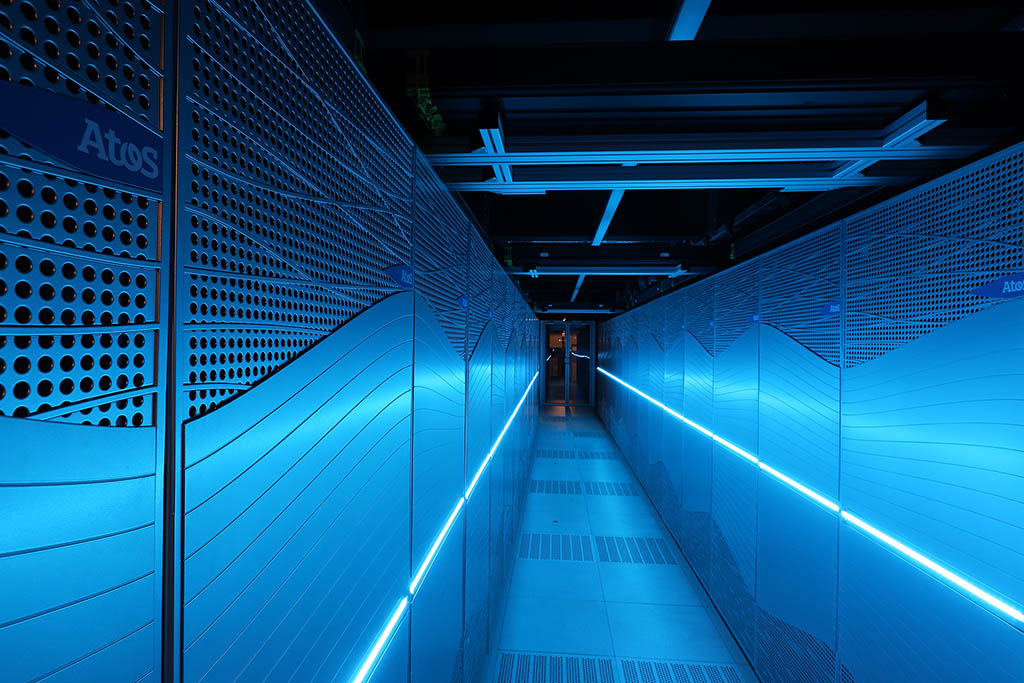

Levante is based on BullSequana XH2000 technology from Atos. Its CPU partition comprises 2,832 compute nodes, each with two processors, which together deliver a peak performance of 14 petaFLOPS, that is, 14 quadrillion floating-point operations per second. The processors are third-generation AMD EPYC CPUs with 64 cores each. The system's total main memory is more than 800 terabytes, equivalent to the main memory of about 100,000 laptops with 8 gigabytes each. To cover different classes of requirements, the individual compute nodes have between 256 and 1,024 gigabytes of main memory.
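The headline figures above follow from simple multiplication. The sketch below reproduces them as a back-of-envelope check. The node, socket and core counts come from the text; the clock rate of 2.45 GHz and the 16 double-precision operations per core per cycle are assumptions typical of a 64-core third-generation EPYC part, not figures stated here, so the result is only an approximate cross-check of the quoted 14 petaFLOPS.

```python
# Back-of-envelope check of the Levante CPU-partition figures.
# From the text: node count, processors per node, cores per processor,
# total main memory (lower bound) and the "100,000 laptops" comparison.
# ASSUMPTIONS (not stated in the text): 2.45 GHz clock and 16 FP64
# operations per core per cycle (two 256-bit fused multiply-add units).

NODES = 2_832             # CPU compute nodes
SOCKETS_PER_NODE = 2      # processors per node
CORES_PER_SOCKET = 64     # cores per AMD EPYC processor

CLOCK_HZ = 2.45e9         # assumed clock rate
FLOPS_PER_CYCLE = 16      # assumed FP64 operations per core per cycle

TOTAL_RAM_TB = 800        # "more than 800 terabytes" (lower bound)
LAPTOP_RAM_GB = 8         # main memory per laptop in the comparison


def total_cores() -> int:
    """Number of CPU cores in the partition."""
    return NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET


def peak_petaflops() -> float:
    """Theoretical peak = cores x clock x operations per cycle, in petaFLOPS."""
    return total_cores() * CLOCK_HZ * FLOPS_PER_CYCLE / 1e15


def laptop_equivalents() -> float:
    """Number of 8 GB laptops matching the total main memory (decimal units)."""
    return TOTAL_RAM_TB * 1_000 / LAPTOP_RAM_GB


if __name__ == "__main__":
    print(f"cores:   {total_cores():,}")
    print(f"peak:    {peak_petaflops():.1f} petaFLOPS")
    print(f"laptops: {laptop_equivalents():,.0f}")
```

Under these assumptions the partition has 362,496 cores and a theoretical peak of roughly 14.2 petaFLOPS, consistent with the rounded figure in the text.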

Figure 2: The supercomputer "Levante" consists of roughly 2,900 networked computers.

In addition to the CPU partition, Levante will receive a partition of 60 GPU nodes in summer, with a peak performance of 2.8 petaFLOPS. Each GPU node is equipped with two AMD EPYC processors and four NVIDIA A100 graphics processing units (GPUs). On 56 of these nodes, each GPU has 80 gigabytes of memory; on the remaining four nodes, each GPU has 40 gigabytes.
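The GPU partition can be tallied the same way. This minimal sketch uses only the counts given above and reads the 56/4 split as applying to nodes (56 + 4 = 60 nodes); the dictionary layout and function names are illustrative, not part of any DKRZ tooling.

```python
# Tally of the planned Levante GPU partition from the figures in the text:
# 60 nodes, four NVIDIA A100 GPUs per node, 56 nodes whose GPUs have 80 GB
# each and four nodes whose GPUs have 40 GB each.

GPUS_PER_NODE = 4

# Memory per GPU in gigabytes -> number of nodes with that GPU type
NODE_GROUPS = {
    80: 56,
    40: 4,
}


def node_count() -> int:
    """Total number of GPU nodes."""
    return sum(NODE_GROUPS.values())


def gpu_count() -> int:
    """Total number of GPUs in the partition."""
    return node_count() * GPUS_PER_NODE


def total_gpu_memory_gb() -> int:
    """Aggregate GPU memory across all nodes, in gigabytes."""
    return sum(mem * nodes * GPUS_PER_NODE for mem, nodes in NODE_GROUPS.items())


if __name__ == "__main__":
    print(f"nodes: {node_count()}")
    print(f"GPUs:  {gpu_count()}")
    print(f"GPU memory: {total_gpu_memory_gb():,} GB")
```

With this reading, the partition holds 240 GPUs with 18,560 gigabytes of GPU memory in total.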

This increasingly heterogeneous hardware architecture poses major challenges for scientific software development. DKRZ will support its users in adapting their workflows (e.g. porting program code or using artificial intelligence) so that they can make efficient use of these developments in high-performance computing.

For data transfer between the compute nodes and the storage components, Levante uses NVIDIA Mellanox InfiniBand HDR 200G technology, which achieves data transfer rates of up to 200 Gbit/s.

To store and analyze the simulation results, Levante is equipped with a storage system of about 130 petabytes from DDN, more than twice the storage space previously available. That is around 130,000 times the capacity of a conventional laptop with one terabyte of disk space.

The predecessor system Mistral (HLRE-3), also an Atos supercomputer, went into operation in 2015 and has helped to consolidate and further expand the internationally excellent reputation of German climate research. For example, most of the German CMIP6 simulations carried out in preparation for the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC) were computed on Mistral. After a transition period of about three months, during which both systems will run in parallel so that applications and data can be moved to the new system, Mistral will go into well-deserved retirement.

Financing

Under the financing agreement of November 2017, the HLRE-4 project is funded with a total of 45 million euros by the Helmholtz Association, the Max Planck Society and the Free and Hanseatic City of Hamburg.