In addition to theoretical gaps in the understanding of physical processes, limited computing capacity has so far prevented an adequate representation of clouds and precipitation in climate models. The scales on which these processes take place range from micrometers, e.g. for condensation nuclei, to hundreds of kilometers, e.g. for atmospheric fronts. To account for the influence of all scales in the simulations, small-scale processes that the model grid cannot resolve have to be represented by assumptions and approximations based on observations, to the best of current knowledge; they are “parameterized”. Unfortunately, some of these parameterizations cause major uncertainties in the current generation of climate models, which in turn lead to uncertainties in climate projections of possible future scenarios. In particular, the parameterizations of convection and precipitation represent processes on scales ranging from a few hundred meters to several kilometers, which are poorly understood and poorly implemented.

In the context of HD(CP)2, this problem will be addressed through a combination of simulation and observational sciences as well as computational science using high performance computing systems.

HD(CP)2 is short for High Definition Cloud and Precipitation for Advancing Climate Prediction and pursues the following overarching objectives:

- The improvement of climate forecasts through better representation of clouds and precipitation in current climate models.

- The quantification and reduction of model uncertainties by estimating the influence of inadequate cloud and precipitation parameterizations.

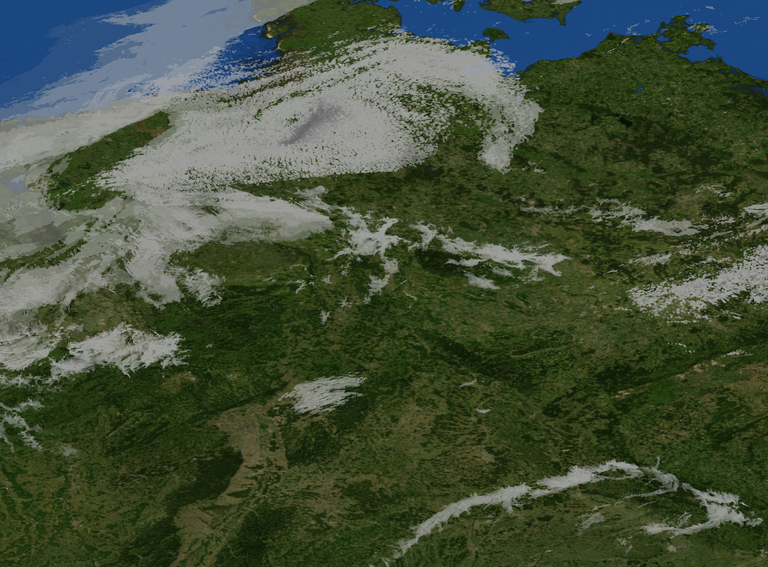

Figure 1 shows a snapshot of the simulated cloud coverage over the central model area in the middle of Germany.

Fig. 1: Simulated cloud coverage on 27th April 2013 using ICON-LES (visualized by N. Röber, DKRZ)

The overall project HD(CP)2 is designed as a three-stage project: In a first step (2012-2015), the infrastructure necessary for high-resolution simulations and their analysis was created within three modules. In a second step (2016-2019), this infrastructure is now being used to tackle key scientific questions within six scientific projects: aerosol effects on cloud processes, boundary layer clouds, cirrus clouds, land surface effects on clouds and precipitation, clouds and convective organization, and the position of storms in a changing climate. The further development of the required tools is bundled in project M (modeling) and project O (observations).

Contribution of DKRZ and scientific applications

Project M is coordinated by DKRZ. This sub-project focuses on parallelizing and optimizing the code, developing the modeling infrastructure, and visualizing very large amounts of data.

The latest generations of high performance computing systems are massively parallel computing platforms consisting of several thousand processor cores and distributed memory. In order to make the best use of such systems, the model calculations and the related data need to be distributed over a large number of CPUs. For the ICON model, which is used for the modeling in HD(CP)², this means that all global data structures have to be replaced by distributed structures and the corresponding algorithms have to be parallelized. So far, DKRZ has restructured the ICON code in three respects: domain decomposition of the computational grid, implementation of various communication patterns based on MPI (Message Passing Interface), and parallel input of NetCDF data.
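The idea behind domain decomposition can be illustrated with a minimal sketch. It assumes a simple contiguous block partition of global cell indices; the function names `block_range` and `owner_of` are hypothetical and are not taken from the ICON code. A real decomposition of an unstructured grid would also have to consider cell neighborhoods and typically exchanges boundary ("halo") cells between processes via MPI, which is not shown here.

```python
def block_range(n_cells: int, n_ranks: int, rank: int) -> range:
    """Return the contiguous range of global cell indices owned by `rank`."""
    base, extra = divmod(n_cells, n_ranks)
    # The first `extra` ranks each take one additional cell,
    # so block sizes differ by at most one.
    start = rank * base + min(rank, extra)
    size = base + (1 if rank < extra else 0)
    return range(start, start + size)


def owner_of(cell: int, n_cells: int, n_ranks: int) -> int:
    """Return the rank that owns a given global cell index."""
    base, extra = divmod(n_cells, n_ranks)
    boundary = extra * (base + 1)  # number of cells held by the larger blocks
    if cell < boundary:
        return cell // (base + 1)
    return extra + (cell - boundary) // base


if __name__ == "__main__":
    # Toy example: 10 cells distributed over 4 processes.
    for r in range(4):
        print(r, list(block_range(10, 4, r)))
```

With such a partition, each process only allocates memory for its own block instead of a global array, which is the point of replacing global data structures by distributed ones.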

DKRZ operates "Mistral", a bullx B700 DLC high performance computing system that is capable of meeting the requirements of HD(CP)² in terms of computing power, memory size and storage capacity.

The enormous demand for computing power and storage is caused by the number of grid points to be calculated and represents the technical challenge that HD(CP)2 poses for computer science: If the area of Germany is represented with a horizontal resolution of 100 m by 100 m, a total of approximately 22 million grid cells must be calculated per layer. In order to represent clouds and precipitation processes adequately, the atmosphere is vertically represented by 150 layers up to a height of 21 km, so that the model covers a total of about 3.3 billion grid cells. To simulate one day of the HD(CP)2 measurement campaign as a model experiment in so-called hindcasts, more than 2 million core hours of computing time are required. If 500 computing nodes of the high-performance computer Mistral, each with 24 cores, are used (that is one third of the whole system), the simulation of a campaign day requires more than 7 days of wall clock time.

Although only a part of the data is stored, the total output adds up to about 45 terabytes per simulated day.
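The figures above can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in the text; the variable names are illustrative, and the average write rate is a derived estimate that assumes the output is written evenly over the whole run.

```python
# Figures quoted in the text (approximate values).
CELLS_PER_LAYER = 22_000_000      # grid cells per horizontal layer
LAYERS = 150                      # vertical layers up to 21 km
CORE_HOURS_PER_DAY = 2_000_000    # lower bound: "more than 2 million"
NODES = 500
CORES_PER_NODE = 24
OUTPUT_TB_PER_DAY = 45            # output per simulated day

total_cells = CELLS_PER_LAYER * LAYERS            # about 3.3 billion
cores = NODES * CORES_PER_NODE                    # 12,000 cores
wall_clock_hours = CORE_HOURS_PER_DAY / cores     # about 167 hours
wall_clock_days = wall_clock_hours / 24           # about 6.9 days

# Assumption: output is spread evenly over the run (1 TB = 1e12 bytes).
avg_write_mb_per_s = OUTPUT_TB_PER_DAY * 1e12 / (wall_clock_hours * 3600) / 1e6

print(f"total grid cells:   {total_cells:,}")
print(f"cores used:         {cores:,}")
print(f"wall clock time:    {wall_clock_days:.2f} days")
print(f"average write rate: {avg_write_mb_per_s:.0f} MB/s")
```

At exactly 2 million core hours the run would take just under 7 days on 12,000 cores; a wall clock time of more than 7 days corresponds to slightly more than 2.016 million core hours, which is consistent with the "more than 2 million" stated above.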

The development of a regional ICON model with an effective grid width of 100 m (ICON-LES) has been completed. For the first time, a global circulation model could be used in a regional setup with a resolution that so far could only be achieved by classical LES (large-eddy simulation) models.

As a result, new opportunities arise for ultra-high resolution simulations of Germany with unprecedented physical realism; they are being used for a hindcast simulation of a few weeks in April 2013. For the same period, HD(CP)² has acquired observational data at a resolution that has never been achieved before.

During this year, the model area in Germany is to be supplemented by additional domains worldwide in order to completely cover the relevant cloud and precipitation processes. At the same time, the scientific work on long-term climate effects will start in 2016: Experiments with the high-resolution model using varying CO2, aerosol or land use scenarios for Germany are expected to provide several possible explanations for model spread.

The further development of online evaluation and diagnostics tools, carried out in cooperation with universities and the DLR Oberpfaffenhofen, plays a major role for future simulations whose output is too large to be saved completely.

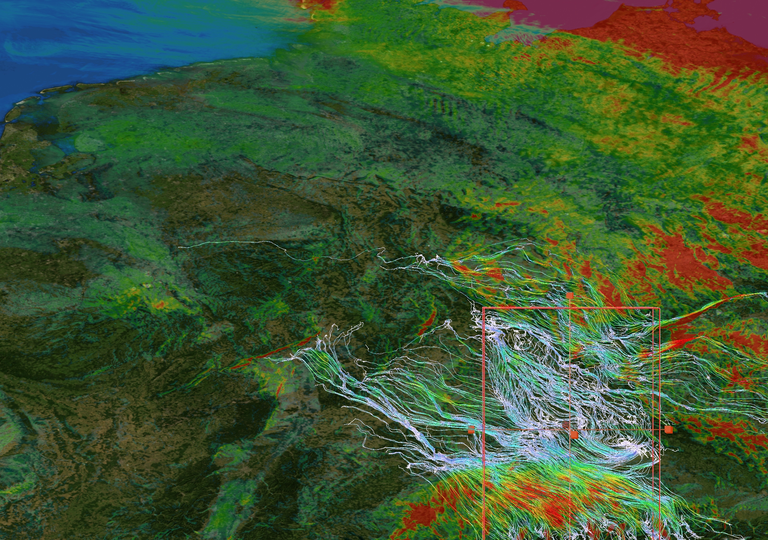

Fig. 2: Simulated wind velocity and individual flow lines near the Alps using the model ICON-LES (visualized by N. Röber, DKRZ)

Successful restructuring of the ICON model

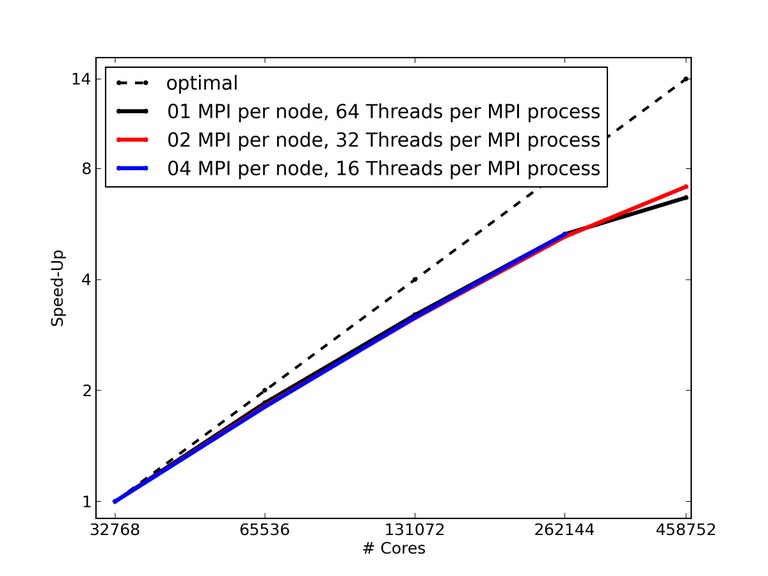

The necessary refactoring of ICON within the framework of HD(CP)2 resulted in an SPMD-parallel program (Single Program Multiple Data) that is able to run efficiently on extreme-scale HPC architectures. In the course of the refactoring, DKRZ carried out successful strong scaling experiments (scaling tests in which the problem size is kept fixed while the number of processor cores is increased) on the Blue Gene/Q system "JUQUEEN". Runtime measurements of ICON-LES with a spatial resolution of 120 m show excellent scaling up to 458,752 cores (Figure 3). Only the time that the parallel algorithms need for the calculation was measured, not the time for writing the results to a file system. Due to the limited memory size of the JUQUEEN nodes, real production runs with ICON-LES including input and output are not possible on that system. Instead, the ICON-LES production runs are carried out on Mistral at DKRZ, which has been available since July 2015.

Fig. 3: Strong scaling of the ICON model with a grid width of 120 m on the Blue Gene/Q system "JUQUEEN" using different numbers of MPI processes per node and OpenMP threads per MPI process.
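How strong scaling results are usually evaluated can be sketched as follows. For a fixed problem size, speedup is the runtime of a baseline run divided by the runtime on more cores, and parallel efficiency is that speedup divided by the factor by which the core count grew. The function name `strong_scaling` and the runtimes in the example are hypothetical and purely illustrative; they are not the JUQUEEN measurements behind Figure 3.

```python
from __future__ import annotations


def strong_scaling(timings: dict[int, float]) -> list[tuple[int, float, float]]:
    """Return (cores, speedup, efficiency) rows for a fixed problem size.

    `timings` maps a core count to the measured runtime in seconds.
    All values are relative to the run with the fewest cores.
    """
    base_cores = min(timings)
    base_time = timings[base_cores]
    rows = []
    for cores in sorted(timings):
        speedup = base_time / timings[cores]
        # Ideal scaling would give a speedup equal to cores / base_cores.
        efficiency = speedup / (cores / base_cores)
        rows.append((cores, speedup, efficiency))
    return rows


if __name__ == "__main__":
    # Hypothetical runtimes in seconds, NOT measured values.
    example = {1024: 800.0, 2048: 410.0, 4096: 215.0}
    for cores, speedup, efficiency in strong_scaling(example):
        print(f"{cores:>6} cores  speedup {speedup:5.2f}  efficiency {efficiency:6.1%}")
```

An efficiency close to 100 % over the whole range of core counts is what a statement such as "excellent scaling" typically refers to.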

Visualization of the HD(CP)2 simulation on YouTube

Further information is available on the website of the project HD(CP)²: http://hdcp2.zmaw.de

Authors:

Dr. Panogiotis Adamidis, Michael Böttinger, Dr. Kerstin Fieg, Dr. Ksenia Gorges, DKRZ

Dr. Florian Rauser, MPI-M

Contact:

Dr. Florian Rauser, MPI-M (project coordinator): florian.rauser[at]mpimet.mpg.de

Dr. Panogiotis Adamidis, DKRZ (program optimization): adamidis[at]dkrz.de