The AIM team at DKRZ supports researchers from various Helmholtz centers in the introduction, evaluation, and practical use of ML/AI technologies, such as artificial neural networks (ANNs) or decision trees.

In practice, users from the corresponding "Earth and Environment" Helmholtz facilities contact the AIM team with their inquiries. The requests vary in complexity and in the workload involved: from answering technical or methodological questions to planning, implementing, and executing complex ML workflows.

Artificial intelligence and machine learning methods offer a number of promising opportunities for climate modelling and climate data analysis. The computational effort required for climate simulations increases with model resolution, and higher resolution is needed to represent small-scale processes such as cloud formation more realistically. On the one hand, machine learning methods could reduce the computational effort of model calculations and thus make them faster; on the other hand, they could improve the quality of the results or reveal previously unknown regularities and patterns in the simulated data.

To outsiders, these methods often sound like inexplicable magic, partly because of striking examples from large companies such as Facebook or Google. However, such examples often represent only a small, specialized area of what artificial intelligence can do, such as automatically generating or completing images. The algorithms behind them are complex and require immense amounts of training data and optimization before the intended learning effect occurs. Projecting the global average temperature up to the year 2100 requires complex calculations with a sophisticated climate model. If a machine learning method is simply given the temperature curve of the past 170 years, it cannot 'magically' continue that curve to the year 2100. It needs large amounts of complex training data and must be developed step by step until its results reach an acceptable quality. A typical challenge here is that a model fits the given data too closely and generalizes poorly to new data (overfitting).
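The overfitting problem can be illustrated with a deliberately simple sketch. It uses synthetic data (a linear trend plus noise, not real temperature observations) and NumPy polynomial fits instead of a neural network; the two polynomial degrees and the 80/20 split are arbitrary choices for illustration. A more flexible model fits the known part of the record more closely, but typically fails badly when asked to continue it.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Synthetic, illustrative series: a linear trend plus noise.
# This is NOT real temperature data; it only stands in for a short record.
rng = np.random.default_rng(seed=0)
t = np.linspace(0.0, 1.0, 50)               # normalised time axis
y = 0.8 * t + rng.normal(0.0, 0.1, t.size)  # trend + noise

# Fit on the first 80 % of the record, then "continue the curve" beyond it.
split = int(0.8 * t.size)
t_train, y_train = t[:split], y[:split]
t_future, y_future = t[split:], y[split:]


def mse(a, b):
    """Mean squared error between two arrays."""
    return float(np.mean((a - b) ** 2))


results = {}
for degree in (1, 12):
    # Polynomial.fit maps t to [-1, 1] internally for numerical stability
    p = Polynomial.fit(t_train, y_train, deg=degree)
    results[degree] = (mse(p(t_train), y_train), mse(p(t_future), y_future))

for degree, (train_err, future_err) in results.items():
    print(f"degree {degree:2d}: train MSE {train_err:.4f}, "
          f"extrapolation MSE {future_err:.4f}")
```

The high-degree fit has the lower training error but a far larger extrapolation error, which is the pattern the paragraph above describes.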

The AIM team processes ML support requests iteratively. First, it develops a model architecture, the data processing, and an operational environment, for example for the automated execution of training runs and the optimization of model settings (hyperparameters) through so-called hyperparameter tuning. These are tested in short cycles, and the results are evaluated together with the scientists being supported. Over these cycles, the ML methods are refined until they deliver satisfactory results. The objectives can vary: for example, increasing the accuracy of a prediction or regression, reducing the required computing time (in this case, the duration of a training run), or comparing different methods, such as neural networks and decision trees.
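Hyperparameter tuning can be sketched as a search over candidate settings, each scored on held-out validation data. The example below is a minimal, hypothetical illustration: it tunes the regularization strength `lam` of a closed-form ridge regression on synthetic data with a plain grid search. The AIM team's actual tooling, models, and search strategy are not described in the text, and real workflows often use random or Bayesian search instead.

```python
import numpy as np

# Hypothetical regression problem standing in for a real ML task.
rng = np.random.default_rng(seed=1)
X = rng.normal(size=(200, 20))
true_w = rng.normal(size=20)
y = X @ true_w + rng.normal(scale=0.5, size=200)

# Hold out part of the data to judge each hyperparameter setting.
X_train, y_train = X[:150], y[:150]
X_val, y_val = X[150:], y[150:]


def fit_ridge(X, y, lam):
    """Closed-form ridge regression: w = (X^T X + lam * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


def val_error(lam):
    """Train with one hyperparameter value and return the validation MSE."""
    w = fit_ridge(X_train, y_train, lam)
    return float(np.mean((X_val @ w - y_val) ** 2))


# One tuning cycle: evaluate every candidate value and keep the best one.
grid = [0.001, 0.01, 0.1, 1.0, 10.0, 100.0]
scores = {lam: val_error(lam) for lam in grid}
best_lam = min(scores, key=scores.get)
print("validation MSE per lambda:", scores)
print("best lambda:", best_lam)
```

Each candidate requires a full training run, which is why the duration of a single run matters so much when tuning is repeated over many cycles.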

After almost one year in existence, the AIM team at DKRZ has handled requests covering a wide range of topics in climate and environmental research from different Helmholtz centers. Two projects that have been running for some time are presented below; they demonstrate the increasing use of ML methods, their benefits for climate research, and their further potential for innovation.

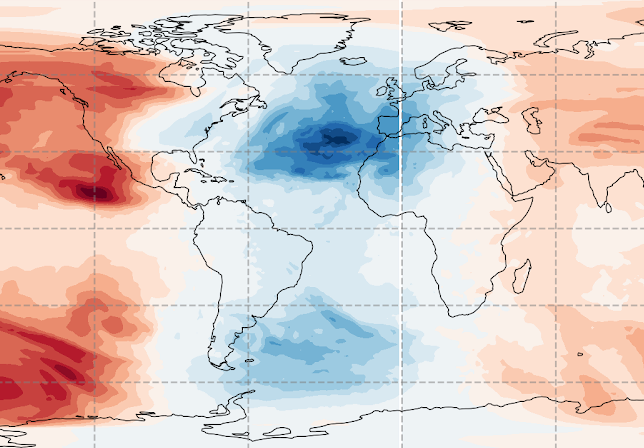

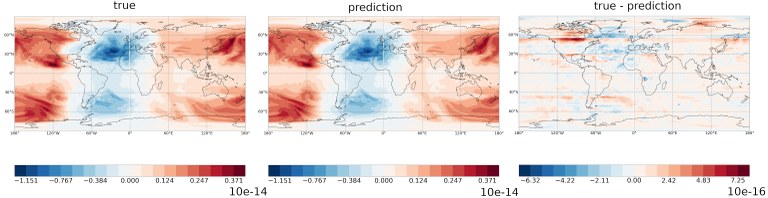

Fig. 1: Prediction of the ML model (centre) compared with the output of the original model (left) for atmospheric HNO3 (nitric acid). The error between the two outputs (i.e., their difference, right) is two orders of magnitude smaller than the values themselves and thus within an acceptable range.

FastChem: Investigation of ML Methodology for Atmospheric Chemistry

Modelling chemical processes in the Earth's atmosphere, such as stratospheric ozone chemistry, is essential to the overall understanding of Earth system processes and changes, both past and future. However, coupled climate models that simulate atmospheric chemistry are computationally very expensive and are therefore rarely used in scenario calculations.

At the request of the Institute for Meteorology and Climate Research and the Steinbuch Centre for Computing (SCC) of KIT, the AIM team is currently investigating to what extent the output of an atmospheric chemistry model can be learned and emulated by machine learning, and how the trained ML models could later be integrated into operational climate models. The goal is to minimize computing time by using an ML method while staying as close as possible to the results of the dynamic chemistry model.

To this end, artificial neural networks are used as the ML method. Since the goal is to imitate the results of an existing model, the limited availability of training data, a common hurdle in ML problems, does not arise here: additional data (inputs and outputs of the existing model) can be generated comparatively easily and in large quantities.
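Because the reference model itself produces the training data, obtaining more samples is just a matter of running it on more inputs. The sketch below illustrates this with a hypothetical toy reaction (`reference_step`, A + B -> C with an assumed rate constant) standing in for the chemistry model, and a small dense network written in plain NumPy. It is not the KIT/DKRZ setup; the network size, learning rate, and number of epochs are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=2)


def reference_step(c, k=5.0, dt=0.1):
    """Hypothetical stand-in for one step of a chemistry solver:
    a single reaction A + B -> C with mass-action rate k * A * B."""
    rate = k * c[:, 0] * c[:, 1]
    delta = np.stack([-rate, -rate, rate], axis=1) * dt
    return c + delta


# Training data is cheap: sample inputs and run the reference model on them.
X = rng.uniform(0.0, 1.0, size=(5000, 3))
Y = reference_step(X)

# A small dense network 3 -> 32 (tanh) -> 3, with no convolutional or
# recurrent layers.
W1 = rng.normal(0.0, 0.5, size=(3, 32))
b1 = np.zeros(32)
W2 = rng.normal(0.0, 0.5, size=(32, 3))
b2 = np.zeros(3)


def forward(x):
    """Return hidden activations and network output."""
    h = np.tanh(x @ W1 + b1)
    return h, h @ W2 + b2


lr = 0.05
losses = []
for epoch in range(2000):
    h, pred = forward(X)
    err = pred - Y
    losses.append(float(np.mean(err ** 2)))
    # Backpropagation of the mean-squared-error loss (full-batch gradient descent)
    g_out = 2.0 * err / err.size
    gW2 = h.T @ g_out
    gb2 = g_out.sum(axis=0)
    g_h = (g_out @ W2.T) * (1.0 - h ** 2)  # derivative of tanh
    gW1 = X.T @ g_h
    gb1 = g_h.sum(axis=0)
    W1 -= lr * gW1
    b1 -= lr * gb1
    W2 -= lr * gW2
    b2 -= lr * gb2

print(f"MSE first epoch {losses[0]:.4f}, last epoch {losses[-1]:.6f}")
```

In a real setting, the same loop of "sample inputs, run the reference model, store input/output pairs" can be repeated whenever more training data is needed.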

In this case, the complexity and peculiarities of the data pose a greater challenge than the architecture of the artificial neural network. The network is relatively simple: it contains few layers and uses neither layers that process spatial data (convolutional layers) nor layers that process temporal patterns (recurrent layers). Rather, the particular challenge is that the data include values for 110 chemical components, each of which may have its own statistical distribution and may be involved in various chemical reactions.
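One common way to handle components with very different value ranges is to normalize each component separately. The sketch below assumes, for illustration only, that concentrations are positive and span many orders of magnitude, so it applies a log transform followed by per-species standardization. The synthetic data and the `PerSpeciesScaler` class are hypothetical; the project's actual preprocessing is not described in the text.

```python
import numpy as np

N_SPECIES = 110  # number of chemical components mentioned in the text

rng = np.random.default_rng(seed=3)
# Hypothetical concentrations: each species has its own typical scale,
# spanning many orders of magnitude (log-normal draws per species).
log_scales = rng.uniform(-12.0, -3.0, size=N_SPECIES)
conc = 10.0 ** (log_scales + rng.normal(0.0, 0.5, size=(10_000, N_SPECIES)))


class PerSpeciesScaler:
    """Log-transform, then standardize each species separately
    (an illustrative assumption, not the project's documented scheme)."""

    def __init__(self, eps=1e-30):
        self.eps = eps  # guards against log10(0)

    def fit(self, x):
        logx = np.log10(x + self.eps)
        self.mean_ = logx.mean(axis=0)        # one mean per species
        self.std_ = logx.std(axis=0) + 1e-12  # one spread per species
        return self

    def transform(self, x):
        return (np.log10(x + self.eps) - self.mean_) / self.std_

    def inverse_transform(self, z):
        return 10.0 ** (z * self.std_ + self.mean_) - self.eps


scaler = PerSpeciesScaler().fit(conc)
z = scaler.transform(conc)
print(z.mean(axis=0)[:3], z.std(axis=0)[:3])
```

Keeping `mean_` and `std_` per species also allows the network's outputs to be mapped back to physical units with `inverse_transform`.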

Another challenge is the large amount of data, since training was extended from data of single time steps to time-dependent data covering a 3-month period. The data volume now exceeds the memory of the graphics processing units (GPUs) available on the DKRZ supercomputer Mistral. As a result, considerably more sophisticated strategies are needed to keep training fast and the processors well utilized. Since further improving the quality of the results will likely require even more data, a fundamentally different approach to data transfer within the high-performance computer will be necessary. In this context, findings from the second example (see below) will become increasingly important.
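When the training data no longer fit into (GPU) memory, a standard approach is to keep them on disk and load only one mini-batch at a time. The sketch below uses NumPy's `np.memmap` for this. The file name, array shape, and batch size are hypothetical, and the step that copies each batch to the GPU is omitted; it illustrates the general idea only, not the strategies actually used on Mistral.

```python
import os
import tempfile

import numpy as np

shape = (20_000, 110)  # hypothetical: samples x chemical components
path = os.path.join(tempfile.mkdtemp(), "train_inputs.f32")

# Setup only: write a synthetic dataset to disk once.
rng = np.random.default_rng(seed=6)
on_disk = np.memmap(path, dtype=np.float32, mode="w+", shape=shape)
on_disk[:] = rng.normal(size=shape).astype(np.float32)
on_disk.flush()
del on_disk


def iterate_batches(path, shape, batch_size, rng):
    """Yield shuffled mini-batches while holding only one batch in RAM."""
    arr = np.memmap(path, dtype=np.float32, mode="r", shape=shape)
    order = rng.permutation(shape[0])
    for start in range(0, shape[0], batch_size):
        # Sorting indices within a batch keeps its contents random but
        # makes the reads move forward through the file.
        idx = np.sort(order[start:start + batch_size])
        yield np.asarray(arr[idx])  # fancy indexing copies just this batch


n_rows, total = 0, 0.0
for batch in iterate_batches(path, shape, batch_size=512, rng=rng):
    n_rows += batch.shape[0]
    total += float(batch.sum(dtype=np.float64))
    # ...here the batch would be copied to the GPU for a training step
```

Because every sample is visited once per epoch in random order, this keeps the statistical behaviour of in-memory training while bounding memory use by the batch size.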

The first prototype implementations show that the computations of the dynamic chemistry model can in principle be reproduced with an artificial neural network, although so far only for a small subset of chemical components, and that the deviations grow over time. Although training is computationally very expensive, a first assessment indicates that, once trained, the network can be integrated into an operational climate model without high computational effort. The original objective therefore seems achievable in principle. However, there is still a long way to go before the network can actually be used in an operational model; promising as this work is, it goes well beyond the support the AIM team can provide.
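Deviations that grow over time are typical when an emulator is applied repeatedly to its own output, because small one-step errors accumulate. The sketch below shows this effect with a deliberately simple, hypothetical linear system (a rotation of a two-component state) rather than a chemistry model; the "emulator" is assumed to have a one-step error of 0.001 rad.

```python
import numpy as np


def rotation(theta):
    """2x2 rotation matrix for angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


# Hypothetical toy system: the reference model rotates the state by 0.05 rad
# per step; the "emulator" has learned 0.051 rad, i.e. a tiny one-step error.
A_ref = rotation(0.05)
A_emu = rotation(0.051)

x_ref = np.array([1.0, 0.0])
x_emu = x_ref.copy()
one_step_errors, rollout_errors = [], []
for _ in range(200):
    # One-step error: emulator fed the TRUE state (as during training)
    one_step_errors.append(float(np.linalg.norm(A_emu @ x_ref - A_ref @ x_ref)))
    # Rollout: each model is fed its OWN previous output
    x_ref = A_ref @ x_ref
    x_emu = A_emu @ x_emu
    rollout_errors.append(float(np.linalg.norm(x_emu - x_ref)))

print(f"one-step error {one_step_errors[-1]:.4f}, "
      f"error after 200 steps {rollout_errors[-1]:.4f}")
```

The one-step error stays constant, while the error of the free-running rollout grows with the number of steps, which is why an emulator that looks accurate on single time steps can still drift in long simulations.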

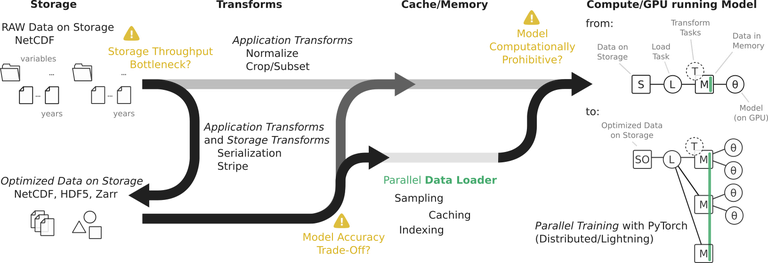

Workflow optimization and benchmarking for high-resolution and large-volume climate model data

As in the first example, the goal is to develop a long-term perspective for applying ML techniques in climate modelling, in which artificial neural networks (ANNs) are combined with an existing coupled climate model and used alongside it in simulations on high-performance computing (HPC) systems. One of the technical challenges arising from such deployment scenarios is the optimal use of expensive GPU nodes: how can GPU nodes be fed with data so that they never sit idle and their computing resources are fully used? This question arises both when training ANNs on large amounts of climate data and when coupling climate models with ANNs over the long term, as described in the previous example.

The problem with using ANNs is that training requires querying very small amounts of data, almost at random, from datasets that are many orders of magnitude larger. Because of their size, these datasets are stored on hard disks. The data therefore travel to the GPUs through several stages of the HPC system: after being loaded into the main memory of a compute node, they must be transferred to the GPU's memory, much like a pipeline with several intermediate stations. Each of these stages is limited both in throughput (the volume of data that can be transferred per second) and in the total amount of data it can hold. Consequently, to maximize GPU throughput, the individual sections of the data path must also be optimized. This first requires measuring the bandwidth achieved in each section, and what limits it, while the GPU performs different tasks, so that the competing optimization goals can be weighed against each other.
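One way to keep a GPU from waiting on data is to overlap loading with computation, so that the next batches are already in memory while the current one is being processed. The sketch below uses a background thread and a bounded queue from the Python standard library. `slow_loader` and `train_step` are hypothetical stand-ins that merely sleep; real ML frameworks provide their own prefetching mechanisms.

```python
import queue
import threading
import time


def prefetch(loader, depth=4):
    """Consume the iterable `loader` in a background thread, keeping up to
    `depth` batches ready so that loading overlaps with computation."""
    buf = queue.Queue(maxsize=depth)

    def worker():
        try:
            for batch in loader:
                buf.put(("batch", batch))  # blocks while the buffer is full
        except Exception as exc:           # forward loader errors to the consumer
            buf.put(("error", exc))
        buf.put(("done", None))

    threading.Thread(target=worker, daemon=True).start()
    while True:
        kind, payload = buf.get()
        if kind == "done":
            return
        if kind == "error":
            raise payload
        yield payload


def slow_loader(n_batches, delay=0.01):
    """Hypothetical stand-in for reading batches from disk."""
    for i in range(n_batches):
        time.sleep(delay)
        yield i


def train_step(batch, delay=0.01):
    """Hypothetical stand-in for a GPU training step."""
    time.sleep(delay)


start = time.perf_counter()
for b in slow_loader(50):
    train_step(b)
serial = time.perf_counter() - start

start = time.perf_counter()
seen = []
for b in prefetch(slow_loader(50)):
    train_step(b)
    seen.append(b)
overlapped = time.perf_counter() - start
print(f"serial {serial:.2f} s, with prefetching {overlapped:.2f} s")
```

Each stage of the pipeline can be buffered in this way; the queue depth bounds how much memory the buffer may occupy, reflecting the limited capacity of each stage.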

Fig. 2: Overview of the steps from raw data (left) to training data that can be used to train an ANN (right). Also highlighted are potential optimization goals that must be weighed against each other because of limited resources such as memory or computational throughput.

Specifically, for this request from the Institute for Coastal Research at Helmholtz-Zentrum Geesthacht (HZG), typical access patterns of an ANN were systematically tested, including runs on Mistral, along the whole path from the GPUs on which the code runs to the hard disks where the required data reside. The use case was the WeatherBench dataset (Rasp et al. 2020), which is representative of typical climate model data and is well prepared and documented for such benchmarks. In addition to running the benchmarks, the team developed code building blocks for constructing and running such a complete data pipeline, as well as recommendations for optimizing similar problems.
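Measuring the bandwidth of one section of the data path can start with a simple microbenchmark that reads the same records sequentially and in random order. The sketch below is hypothetical and is not the benchmark suite used with WeatherBench; the record size and file size are arbitrary. Note that a freshly written test file usually sits in the operating system's page cache, so these numbers mostly reflect memory rather than disk speed; a meaningful disk benchmark needs files larger than RAM or cleared caches.

```python
import os
import tempfile
import time

import numpy as np


def read_throughput(path, record_bytes, order):
    """Read fixed-size records in the given order; return (MB/s, bytes read)."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb", buffering=0) as f:  # unbuffered: one syscall per read
        for i in order:
            f.seek(int(i) * record_bytes)
            total += len(f.read(record_bytes))
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6, total


record_bytes = 4096
n_records = 4096  # 16 MiB test file
path = os.path.join(tempfile.mkdtemp(), "bench.bin")
with open(path, "wb") as f:
    f.write(os.urandom(record_bytes * n_records))

rng = np.random.default_rng(seed=4)
seq_mbps, seq_total = read_throughput(path, record_bytes, range(n_records))
rnd_mbps, rnd_total = read_throughput(path, record_bytes, rng.permutation(n_records))
print(f"sequential: {seq_mbps:.0f} MB/s, random: {rnd_mbps:.0f} MB/s")
```

Repeating such measurements for each stage (disk to RAM, RAM to GPU) and for different record sizes shows where the pipeline's bottleneck lies.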

One finding from this work is that the biggest obstacles often lie in the near-random access to data on the disks. There are several strategies to deal with this. For example, data loading could be offloaded to dedicated nodes, taking advantage of the comparatively fast network connection between CPU nodes and GPU nodes, combined with feeding the network data in a less random order. A scientific publication on this is in preparation.
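"Less random" feeding can take several forms. One common variant, shown here as a hypothetical sketch rather than the approach of the forthcoming publication, is block-wise shuffling: contiguous chunks of samples are selected in random order, a few chunks are pooled in a buffer, and only the buffer is shuffled. The chunk size and buffer size are assumed parameters that trade read efficiency against the randomness of the training order.

```python
import numpy as np


def chunked_shuffle_indices(n_samples, chunk_size, chunks_per_buffer, rng):
    """Yield sample indices in a 'less random' order: contiguous chunks are
    picked at random, several chunks are pooled, and only the pool is shuffled.
    Each disk read can then cover a contiguous block instead of one sample."""
    starts = np.arange(0, n_samples, chunk_size)
    rng.shuffle(starts)  # random order of chunks
    for i in range(0, len(starts), chunks_per_buffer):
        pool = np.concatenate([
            np.arange(s, min(s + chunk_size, n_samples))
            for s in starts[i:i + chunks_per_buffer]
        ])
        rng.shuffle(pool)  # shuffle only within the buffer
        yield from pool


rng = np.random.default_rng(seed=5)
order = np.fromiter(chunked_shuffle_indices(10_240, 256, 8, rng), dtype=np.int64)
print(order[:10])
```

Every sample is still visited exactly once per epoch, but the disk only has to serve a few large contiguous reads per buffer instead of thousands of scattered small ones.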

The support by the AIM team at DKRZ presented here is available to users in the Helmholtz Association free of charge thanks to funding from Helmholtz AI. For all other interested parties, DKRZ offers general advice on these topics.

Authors:

Dr. Tobias Weigel, Jakob Lüttgau, Dr. Caroline Arnold, Dr. Frauke Albrecht, Felix Stiehler

Contact:

consultant-helmholtz.ai@dkrz.de

Weblinks and further information:

- Detailed information about the AIM support team: https://www.helmholtz.ai/themenmenue/our-research/consultant-teams/helmholtz-ai-consultants-hzg/index.html

- Support requests can also be sent directly to Helmholtz AI: https://www.helmholtz.ai/themenmenue/our-model/funding-lines/voucher-system/index.html

- Helmholtz AI wow! videos: a video series in which scientists explain key AI terms in less than a minute: https://vimeo.com/helmholtzai/videos

- Helmholtz AI: https://helmholtz.ai/

- Junior Research Group at HZG: http://m-dml.org/

Literature:

Rasp, S., Dueben, P. D., Scher, S., Weyn, J. A., Mouatadid, S., & Thuerey, N. (2020). WeatherBench: A benchmark dataset for data-driven weather forecasting. ArXiv:2002.00469 [Physics, Stat]. http://arxiv.org/abs/2002.00469