Scalable Earth System Models

Funded by the BMBF (grant number: 01IH08004E)

Partner:

- Deutsches Klimarechenzentrum GmbH (Koordinator)

- Alfred-Wegener-Institut für Polar- und Meeresforschung

- IBM Deutschland GmbH

- Max-Planck-Institut für Chemie

- Max-Planck-Institut für Meteorologie

- Universität Karlsruhe (TH)


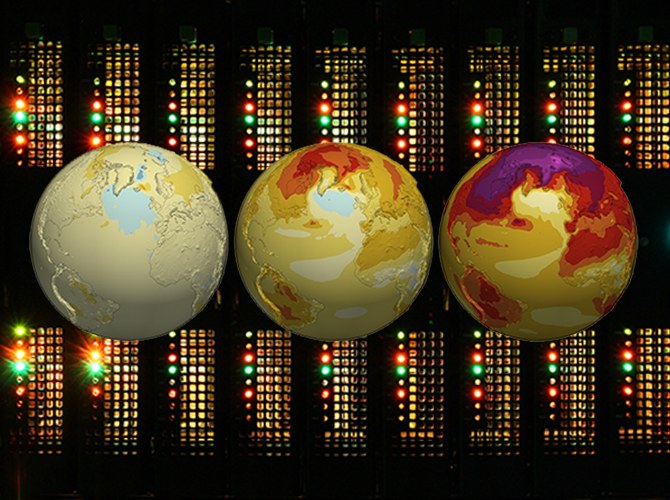

Models for climate and earth system simulation (earth system models, ESMs) currently contain many code structures that ran very efficiently on past architectures, where only a few very powerful CPU cores did the work. Such models are now, and increasingly will be, coupled in complex multi-model simulations that run on parallel HPC systems with thousands to millions of cores. Unfortunately, many models do not yet run efficiently at such scales.

Examples of such models are those used for the last IPCC report. These models are needed to create climate scenarios and climate predictions, which in turn form the basis for decisions in many areas of society. One example of how these results are disseminated is the Climate Service Center established by the German Federal Ministry of Education and Research. To meet these demands, future model generations must improve in accuracy and complexity, and these improvements depend heavily on scaling the models efficiently to high CPU counts.

The ScalES project was conceived to identify and solve scaling problems common to many model codes, using a coupled ESM as an exemplary case. A central commitment of the project is to establish a prototypical, flexible and highly scalable ESM of production quality. A further focus is the design and implementation of generic library components that will be useful both for other ESMs and for entirely different research disciplines.

The results obtained from the ScalES project are linked here: https://www.dkrz.de/redmine/projects/scales-public/wiki/Wiki

Software Components

Parallel I/O library and I/O servers

Data transport and storage in today's earth system models is increasingly time-consuming, because I/O is still implemented essentially serially while parallelization and resolution keep increasing. To fully exploit state-of-the-art parallel file systems such as GPFS or Lustre, model I/O needs to be restructured and parallelized. This project aims to develop such an implementation and to make it available as a library that covers multiple underlying I/O libraries.
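A key step when many processes write one shared file in parallel is that each process knows where its part of the data lands. The sketch below illustrates that step under the assumption of a 1-D array split into contiguous per-rank slices; in an MPI code the offsets would typically come from `MPI_Exscan` and the writes from a collective such as `MPI_File_write_at_all`. The function names are hypothetical and are not part of the ScalES I/O library; the loop only simulates the ranks.

```python
import struct
from itertools import accumulate


def write_offsets(local_counts, itemsize):
    """Byte offset at which each rank starts writing (an exclusive prefix sum)."""
    # offset of rank r = itemsize * (count_0 + ... + count_{r-1})
    return [itemsize * c for c in accumulate(local_counts, initial=0)][:-1]


def simulate_shared_write(local_chunks, itemsize):
    """Place every rank's contiguous byte chunk at its offset in one file image."""
    counts = [len(chunk) // itemsize for chunk in local_chunks]
    offsets = write_offsets(counts, itemsize)
    image = bytearray(sum(len(chunk) for chunk in local_chunks))
    # In a parallel code each rank would write concurrently; this loop stands in for the ranks.
    for offset, chunk in zip(offsets, local_chunks):
        image[offset:offset + len(chunk)] = chunk
    return bytes(image), offsets


if __name__ == "__main__":
    # Three hypothetical ranks, each owning a slice of a global array of doubles.
    chunks = [struct.pack("<3d", 1.0, 2.0, 3.0),
              struct.pack("<2d", 4.0, 5.0),
              struct.pack("<1d", 6.0)]
    data, offsets = simulate_shared_write(chunks, itemsize=8)
    print(offsets)                      # [0, 24, 40]
    print(struct.unpack("<6d", data))   # (1.0, 2.0, 3.0, 4.0, 5.0, 6.0)
```

Because every offset is known before any data is written, no process has to funnel its data through a single writer, which is what removes the serial bottleneck.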

Parallelization and Performance modules

To mitigate both dynamic and static load imbalance, a collection of partitioning routines will be implemented behind a common interface. Helper routines for related tasks in parallel programs, such as free-form boundary exchanges, will complement this core.

The library can be inspected on the DKRZ project server: https://www.dkrz.de/redmine/projects/scales-ppm
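Static load imbalance arises when cells cost different amounts of work. A minimal sketch of one classic partitioning strategy, assuming a 1-D ordering of cells with a known cost weight per cell, assigns contiguous runs of cells so that every part carries roughly the same total weight. The function names are hypothetical and do not reflect the scales-ppm interface.

```python
def partition_weighted(weights, nparts):
    """Owner part of each cell, cutting a 1-D cell sequence into contiguous runs of ~equal weight."""
    total = float(sum(weights))
    if total <= 0.0:
        raise ValueError("weights must have a positive sum")
    owner = []
    prefix = 0.0
    for w in weights:
        # Place the cell by where its weight midpoint falls on the cumulative load.
        part = int((prefix + 0.5 * w) / total * nparts)
        owner.append(min(part, nparts - 1))
        prefix += w
    return owner


def part_loads(weights, owner, nparts):
    """Total weight assigned to each part, for checking the balance."""
    loads = [0.0] * nparts
    for w, p in zip(weights, owner):
        loads[p] += w
    return loads


if __name__ == "__main__":
    # Hypothetical costs: one expensive cell followed by cheap ones.
    weights = [4.0, 1.0, 1.0, 1.0, 1.0]
    owner = partition_weighted(weights, 2)
    print(owner, part_loads(weights, owner, 2))  # [0, 1, 1, 1, 1] [4.0, 4.0]
```

For dynamic imbalance the same routine could be re-run with measured per-cell timings as weights. Very uneven weights can leave a part empty; a production routine would need to guard against that.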

Communication library UniTrans

Many earth system models use hand-written code to decompose a global simulation domain into smaller parts, which are then distributed among the available processes. Depending on the physical quantity being evaluated, each part often needs data from neighboring parts, which leads to inter-process communication, mostly expressed with the MPI communication library.
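Hand-written decomposition code of this kind typically answers two questions: which global indices does a process own, and which indices does it need from its neighbors? A minimal sketch, assuming a 1-D domain of `n` points split into near-equal blocks and a halo of `width` points on each side (all names are hypothetical):

```python
def block_bounds(n, nprocs, rank):
    """Half-open range [lo, hi) of global indices owned by `rank` (near-equal blocks)."""
    base, extra = divmod(n, nprocs)
    lo = rank * base + min(rank, extra)
    hi = lo + base + (1 if rank < extra else 0)
    return lo, hi


def owner_of(n, nprocs, g):
    """Rank that owns global index g under block_bounds."""
    base, extra = divmod(n, nprocs)
    cutoff = extra * (base + 1)  # the first `extra` ranks own one extra point
    if g < cutoff:
        return g // (base + 1)
    return extra + (g - cutoff) // base


def halo_requests(n, nprocs, rank, width, periodic=False):
    """Map neighbor rank -> global indices this rank must receive for its halo."""
    lo, hi = block_bounds(n, nprocs, rank)
    needed = list(range(lo - width, lo)) + list(range(hi, hi + width))
    requests = {}
    for g in needed:
        if periodic:
            g %= n
        elif not 0 <= g < n:
            continue  # outside a non-periodic domain: left to boundary conditions
        src = owner_of(n, nprocs, g)
        if src != rank:
            requests.setdefault(src, []).append(g)
    return requests


if __name__ == "__main__":
    for rank in range(3):
        print(rank, block_bounds(10, 3, rank), halo_requests(10, 3, rank, width=1))
```

In an MPI code each entry of the returned dictionary would correspond to one receive on this rank and a matching send on the owning rank.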

UniTrans is a library, currently layered on top of MPI, that offers a flexible and general concept for describing decompositions and the transitions between them. This includes, e.g., the global transposition required in ECHAM or the local bounds exchange used in MPIOM. The concept simplifies the development of model communication and relieves the models of machine-dependent communication performance aspects. The UniTrans library was implemented by the project partner IBM. The pending release process is expected to result in an open source library soon.
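The idea of describing decompositions and the transitions between them can be illustrated by intersecting index sets: if each decomposition lists the global indices held by each rank, the data rank `s` must send to rank `d` is the intersection of `s`'s source indices with `d`'s destination indices. The sketch below assumes decompositions given as plain Python lists of global indices; it illustrates the concept only and is not the UniTrans API. The usage example builds a row-to-column transposition of a small grid, similar in spirit to the global transposition mentioned above; all names are hypothetical.

```python
def transition_plan(src_decomp, dst_decomp):
    """Map (source rank, destination rank) -> sorted global indices to transfer."""
    # Invert the source decomposition: global index -> rank that currently holds it.
    src_owner = {}
    for rank, indices in enumerate(src_decomp):
        for g in indices:
            src_owner[g] = rank
    plan = {}
    for dst_rank, indices in enumerate(dst_decomp):
        for g in sorted(indices):
            # A KeyError here means the destination asks for data no rank holds.
            plan.setdefault((src_owner[g], dst_rank), []).append(g)
    return plan


def row_decomposition(n, nparts):
    """Rank r holds whole rows of an n x n grid (global index = i * n + j); assumes nparts divides n."""
    rows = n // nparts
    return [[i * n + j for i in range(r * rows, (r + 1) * rows) for j in range(n)]
            for r in range(nparts)]


def column_decomposition(n, nparts):
    """Rank c holds whole columns of the same grid; assumes nparts divides n."""
    cols = n // nparts
    return [[i * n + j for i in range(n) for j in range(c * cols, (c + 1) * cols)]
            for c in range(nparts)]


if __name__ == "__main__":
    plan = transition_plan(row_decomposition(4, 2), column_decomposition(4, 2))
    for (src, dst), indices in sorted(plan.items()):
        print(f"rank {src} -> rank {dst}: {indices}")
```

A local bounds exchange fits the same scheme: the destination decomposition lists each rank's owned indices plus its halo, and the pairs with `s == d` become local copies instead of messages.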

Improvements to the OASIS4 coupler

One of the core features of modern ESMs is the coupler, which handles the communication between the individual climate components. It has to be easy to use, allow models to be exchanged easily, and perform well on different computer architectures. The coupler OASIS4, which is used in this project, is one of the standard tools in the climate research community.

Solver for ocean level with machine-targeted parametrization

The current solver is improved by implementing the conjugate gradient (CG) method and the additive Schwarz method. Hybrid parallelization is used to exploit different hardware architectures more efficiently. The solver is included in the scales-ppm library.
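The combination of the conjugate gradient method with an additive Schwarz preconditioner can be sketched as follows. Assumptions not taken from the scales-ppm solver: the operator is a 1-D tridiagonal stand-in `tridiag(-1, 2 + shift, -1)` rather than the 2-D ocean operator, and the additive Schwarz method is used in its simplest non-overlapping form (block Jacobi), where each subdomain system is solved exactly with the Thomas algorithm. All function names are hypothetical.

```python
import math


def model_operator(x, shift=0.1):
    """y = A x for A = tridiag(-1, 2 + shift, -1), a symmetric positive definite stand-in."""
    n = len(x)
    y = []
    for i in range(n):
        v = (2.0 + shift) * x[i]
        if i > 0:
            v -= x[i - 1]
        if i < n - 1:
            v -= x[i + 1]
        y.append(v)
    return y


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def solve_tridiag(d, rhs):
    """Thomas algorithm for tridiag(-1, d, -1) y = rhs (stable for d >= 2)."""
    n = len(rhs)
    c = [0.0] * n  # modified super-diagonal
    g = [0.0] * n  # modified right-hand side
    c[0] = -1.0 / d
    g[0] = rhs[0] / d
    for i in range(1, n):
        denom = d + c[i - 1]  # d - (sub-diagonal -1) * c[i-1]
        c[i] = -1.0 / denom
        g[i] = (rhs[i] + g[i - 1]) / denom
    y = [0.0] * n
    y[-1] = g[-1]
    for i in range(n - 2, -1, -1):
        y[i] = g[i] - c[i] * y[i + 1]
    return y


def additive_schwarz(block_size, d):
    """Non-overlapping additive Schwarz (block Jacobi): exact solves on each subdomain."""
    def apply(r):
        z = []
        for start in range(0, len(r), block_size):
            # Subdomain solves are independent and could run on separate cores.
            z.extend(solve_tridiag(d, r[start:start + block_size]))
        return z
    return apply


def pcg(matvec, b, precond, tol=1e-10, max_iter=1000):
    """Preconditioned conjugate gradient for a symmetric positive definite A x = b."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x for the initial guess x = 0
    b_norm = math.sqrt(dot(b, b)) or 1.0
    if math.sqrt(dot(r, r)) <= tol * b_norm:
        return x, 0
    z = precond(r)
    p = list(z)
    rz = dot(r, z)
    for it in range(1, max_iter + 1):
        ap = matvec(p)
        alpha = rz / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, it
        z = precond(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


if __name__ == "__main__":
    shift = 0.1
    b = [1.0] * 64
    x, iters = pcg(lambda v: model_operator(v, shift), b,
                   additive_schwarz(block_size=8, d=2.0 + shift))
    residual = max(abs(ai - bi) for ai, bi in zip(model_operator(x, shift), b))
    print(iters, residual)
```

Each subdomain solve in the preconditioner is independent, which is what makes the method attractive for parallel and hybrid execution; overlapping subdomains usually improve convergence at the cost of extra communication.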

----

The project underlying this report was funded by the German Federal Ministry of Education and Research under grant number 01IH08004E. Responsibility for the content of this publication lies with the author.